Today I'd like to discuss something essential to algorithms that every coder should know Big-O Notation. Upon learning Big-O Notation what I now understand why I and every programmer should have in their arsenal. I'll be talking about the very basics of what it is and be demonstrating some basic examples.

What is Big-O Notation

To put it generally Big-O Notation is a simplified way to analyze how efficient the algorithm is. It doesn't do it based on the computer you're running the code on, it's based on the computational steps in your algorithm and the complexity in terms of the input size. It accounts for both how efficient the algorithm is not only in terms of time but also in terms of the amount of memory it takes to process. Big O is typically viewed in terms of worst-case scenario meaning it's accounting for the longest time it could take the code to run. It's used that way because it's used to analyze how inefficient your code could be.

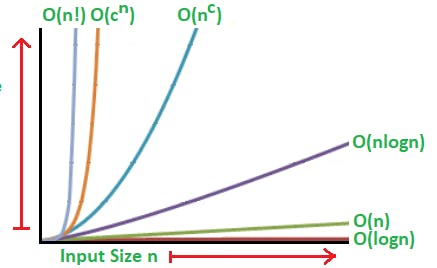

Now let's talk a little bit about its syntax. There are two general rules when it comes to how you represent the tier of Big-O notation your code runs at. One is we always declare it at the highest order of term it runs at. The tiers in order are:

O(1) < O(logn) < O(n) < O(nlogn) < O(n^2) < 0(2^n) < O(n!)

So if your code has some complexity at the O(n) but also O(n^2) we would simply classify at the higher order of O(2^n).

Another rule when it comes to syntax is we don't declare any constants. So even if you have 2n in your computation we will still declare it as O(n) not O(2n). The reason behind that is Big O is an analysis of your codes efficiency in terms of super large scales so that constant becomes negligible compared to say an exponent.

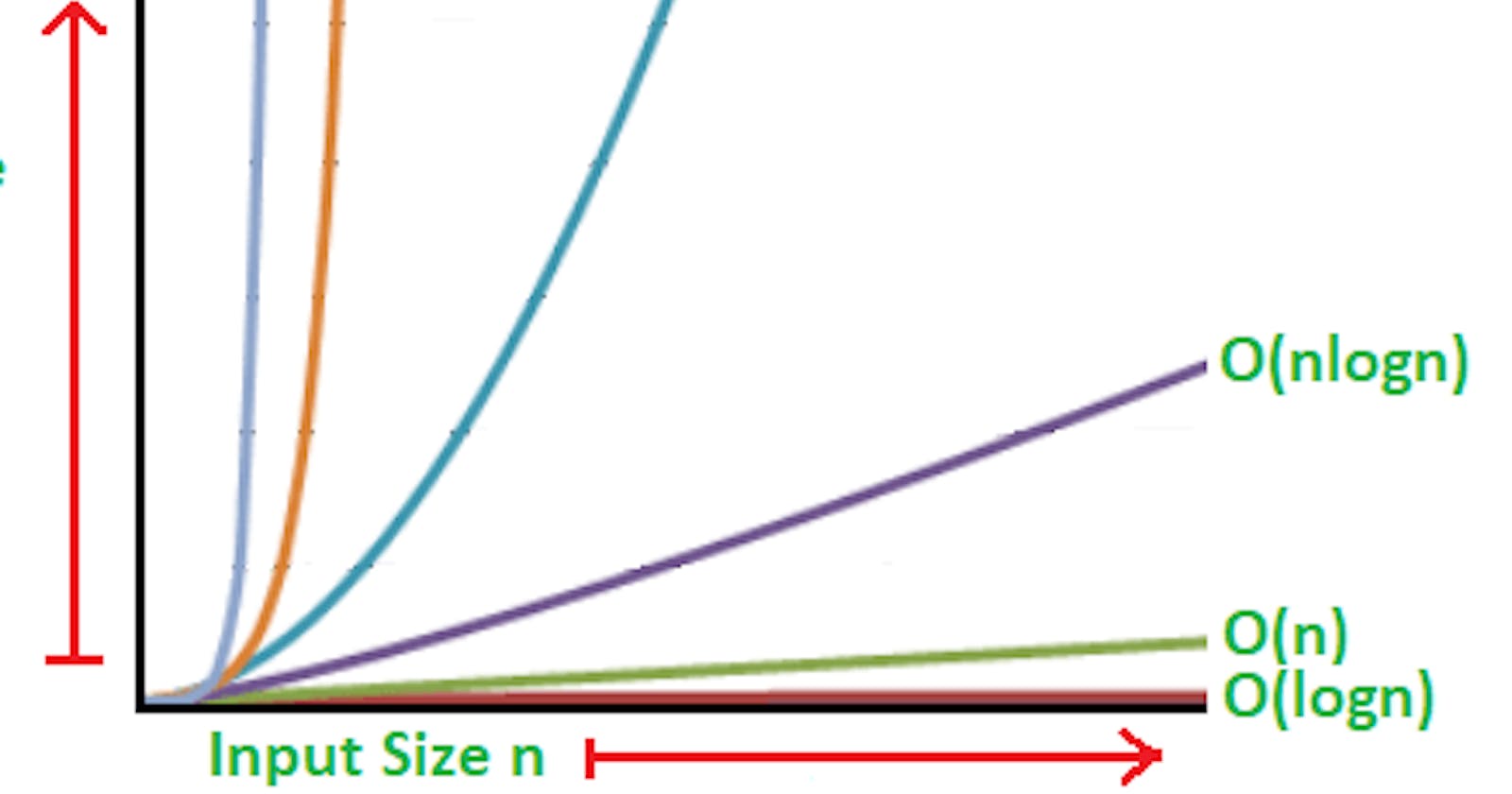

Here's a basic chart to visualize the efficiency in Big O:

Now let's check out a few basic examples.

O(1): Constant Time

The absolute quickest and most efficient form of Big O is O(1) which is constant meaning the amount of time it takes to run it is constant no matter the amount of data in the set. An example of this is looking up an item of an array with a given index.

const outputFirstIndex = (array) => {

console.log(array[0))

}

No matter how large the array you put into the function it runs at the same efficiency.

O(n): Linear Time

Another efficient form of Big O is going to be linear time. It grows linearly with the amount of data you input. Iterating over an array once is considered linear. The larger your array the longer it will take to iterate over each item proportionately.

const outputEachIndex = (array) => {

for(let i = 0; i < array.length; i++) {

console.log(array[i]);

}

for(let i = 0; i < array.length; i++){

console.log(i);

}

}

In that example I'm running two for loops. One will log each element in the array and the other will log each index of the array. So that computational process is 2n, it is running through each item n in the array twice. So our Big O would then be O(n) because remember we drop the constants.

O(n^2): Quadratic Time

Here's where things get considerably slower in time complexity.

const nestingLoops = (array) => {

for(let i = 0; i < array.length; i ++){

for(let j = 0; j < array.length; j++){

console.log(i, j);

}

}

}

When you nest a loop inside of a loop it is iterating over the array by itself squared effectively. For each index it also iterates over itself again. If your array has 5 elements it will effectively iterate 25 times. If it has 100 elements it will iterate 1000 times. That's why you start seeing considerable slowdown at this level of time complexity. The larger the set of data it becomes slower much more to compute.

Why it matters

These are just some of the most basic forms of Big O notation that are easy to digest and visibly see what time complexity means. Why does it matter to know where your code lands? Well if you create functional code but its functioning in Quadratic Time or even higher then that code is inefficient and could bog down anything relying on it. We use Big O notation to see how efficient our solution is and if there is any way to improve it. It's important to know that your code not only functions but it functions well.

That'll be it from me today. As always, thanks for checking this post out and happy coding.